Logic Gates in Neural Networks: Digital Electronics Meet Machine Learning


Logic gates, fundamental in digital electronics, offer a useful analogy for how neural networks compute. An artificial neuron with suitable weights and a threshold can reproduce the AND, OR, and NOT gates, while XOR requires at least one hidden layer. Training algorithms adjust weights and biases, and activation functions supply the non-linearity that makes gate-like behavior possible. This connection helps explain how neural networks handle tasks like image classification, natural language processing, and predictive analytics.

Logic gates, fundamental building blocks of digital electronics, provide a helpful lens on the architecture of neural networks. This article explores the analogies between digital circuits and neural network layers, and shows how operations traditionally implemented in hardware can be expressed by artificial neural networks (ANNs). Understanding these connections makes it easier to reason about what a model can represent, how training shapes it, and why activation functions matter.

- Understanding Neural Networks: The Basic Building Blocks

- Introduction to Logic Gates in Digital Electronics

- Mapping Analogies: How Logic Gates Relate to Neural Networks

- Types of Logic Gates and Their Functions within Neural Network Layers

- Training and Activation Functions: Enhancing Logical Operations

Understanding Neural Networks: The Basic Building Blocks

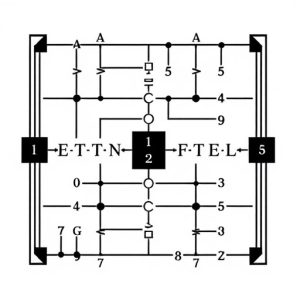

Neural networks, the backbone of modern artificial intelligence, are intricate systems inspired by the human brain’s neural connections. At their core, these networks consist of interconnected nodes or neurons, organized into layers. Each neuron receives inputs, performs calculations using weighted sums and activation functions, and outputs a signal to the next layer. This process mimics the transmission of electrical signals in biological neurons, allowing the network to learn and make predictions.
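The computation performed by one neuron can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `neuron`, the choice of a sigmoid activation, and the example weights are all assumptions made for the sake of the example.

```python
import math


def neuron(inputs, weights, bias):
    """Return the activation of one neuron: sigmoid(weighted sum + bias)."""
    # Weighted sum of the inputs, plus a bias term.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Sigmoid squashes the sum into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical inputs and weights, chosen only for illustration.
output = neuron([1.0, 0.0], [0.5, -0.5], 0.0)
print(output)
```

A layer is simply many such neurons applied to the same inputs, each with its own weights and bias.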

Neural networks are not literally wired from logic gates, but the comparison is instructive. In digital electronics, AND, OR, and NOT gates form the basis for complex circuits; in a neural network, the neuron plays a comparable role as the basic computational unit. A single neuron with appropriate weights and a threshold can reproduce any of these three gates, and unlike a gate, its behavior is learned from data rather than fixed in hardware. The non-linear activation applied by each neuron is what allows the network to learn complex patterns and relationships within data. By combining many such units, neural networks can perform tasks such as image classification, natural language processing, and predictive analytics.

Introduction to Logic Gates in Digital Electronics

In the realm of digital electronics, logic gates play a fundamental role as building blocks for complex circuits. These elementary units are responsible for performing basic logical operations, such as AND, OR, and NOT, on binary inputs to produce specific outputs. Logic gates have revolutionized the way we process information, enabling the development of computers, smartphones, and other digital devices that have become integral parts of our daily lives.

By combining different logic gates, circuits can be designed to execute more intricate operations, such as adders, comparators, and memory elements. Neural networks follow a similar compositional principle: simple units are connected in layers so that the whole computes something no single unit could. The units themselves are different, though, since a neuron applies learned real-valued weights rather than a fixed Boolean rule. Understanding logic gates is thus a useful starting point for grasping how layered computation works in both digital hardware and machine learning.
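As a small illustration of how basic gates combine into a larger circuit, the sketch below builds XOR and a half adder from AND, OR, and NOT. The function names are chosen for this example, and bits are represented as the Python integers 0 and 1.

```python
def AND(a, b):
    return a & b


def OR(a, b):
    return a | b


def NOT(a):
    return 1 - a


def XOR(a, b):
    # "a or b, but not both", built only from the three basic gates.
    return AND(OR(a, b), NOT(AND(a, b)))


def half_adder(a, b):
    """Add two bits; return (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
```

The half adder is a standard first example because it shows two outputs being derived from the same inputs by different gate combinations.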

Mapping Analogies: How Logic Gates Relate to Neural Networks

Logic gates, a cornerstone of digital electronics, play a role analogous to that of neurons in neural networks. A logic gate maps binary inputs to a binary output; a neuron maps real-valued inputs to a real-valued activation, which can range from strongly active to inactive and, at the extremes, behaves like an on or off state. The analogy is useful because both technologies build complex behavior by composing many simple units.

In neural networks, logic gates manifest in the form of activation functions and weighted connections. Activation functions determine whether a neuron fires or remains silent, mimicking the switch-like behavior of logic gates. Weighted connections between neurons act like variable strengths of input signals, allowing for more nuanced decision-making processes akin to complex logical operations. This analogy underscores the power of neural networks in learning intricate patterns from data, making them versatile tools for various applications, from image recognition to natural language processing.
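To make the analogy concrete, here is a minimal sketch of a neuron with a hard step activation whose weights and bias are chosen by hand so that it behaves like an AND, OR, or NOT gate. The helper `step_neuron` and the specific weight values are assumptions for illustration; many other weight choices give the same truth tables.

```python
def step_neuron(inputs, weights, bias):
    """Fire (return 1) when the weighted sum plus bias is positive."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0


def and_gate(a, b):
    # Both inputs are needed to overcome the -1.5 bias.
    return step_neuron([a, b], [1, 1], -1.5)


def or_gate(a, b):
    # A single active input is enough to overcome the -0.5 bias.
    return step_neuron([a, b], [1, 1], -0.5)


def not_gate(a):
    # A negative weight inverts the input.
    return step_neuron([a], [-1], 0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b))
```

Only the bias differs between the AND and OR neurons, which shows how a small change in parameters changes the logical function a neuron computes.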

Types of Logic Gates and Their Functions within Neural Network Layers

In neural networks, hidden-layer neurons can take on roles similar to logic gates while processing and transforming data. They resemble their digital electronics counterparts, but with a twist: their behavior is set by learned weights rather than fixed wiring. The classic gates to compare against are AND, OR, NOT, and XOR. The AND gate outputs 1 only if both inputs are 1, so an AND-like neuron passes a signal on only when all of its required features are present. Conversely, an OR gate outputs 1 when at least one input is 1, so an OR-like neuron aggregates alternative features.

NOT gates invert the input signal, which a neuron can achieve with a negative weight and a positive bias. XOR gates output 1 only when the inputs differ. XOR is the notable case: it is not linearly separable, so no single neuron can compute it, and a network needs at least one hidden layer to represent it. This is a standard illustration of why stacking layers with non-linear activations lets a network capture relationships that a single unit cannot.
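XOR is a useful test case because it cannot be produced by one threshold neuron, yet a small two-layer arrangement handles it. The sketch below uses hand-picked weights (an assumption for illustration, not learned values): two hidden neurons act as OR and NAND, and the output neuron acts as AND.

```python
def step_neuron(inputs, weights, bias):
    """Fire (return 1) when the weighted sum plus bias is positive."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0


def xor_network(a, b):
    # Hidden layer: two neurons looking at the same inputs.
    h_or = step_neuron([a, b], [1, 1], -0.5)     # behaves like OR
    h_nand = step_neuron([a, b], [-1, -1], 1.5)  # behaves like NAND
    # Output layer: fires only if both hidden neurons fired (AND).
    return step_neuron([h_or, h_nand], [1, 1], -1.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The hidden layer is what makes this possible: each hidden neuron draws one linear boundary, and the output neuron combines the two.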

Training and Activation Functions: Enhancing Logical Operations

Training is what sets neural networks apart from fixed logic circuits. During training, an optimizer adjusts weights and biases using gradients computed by backpropagation, so the network gradually reduces its error on the training data. A digital gate, by contrast, has its truth table fixed at design time; in a network, the equivalent of choosing which gate to use is learned from examples.
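The idea of learning a gate from examples can be sketched with a single sigmoid neuron trained by plain gradient descent on the OR truth table. This is the one-neuron special case of backpropagation, not a full multi-layer implementation; the function names, learning rate, and step count are assumptions chosen for this example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Truth table for OR: ((input_1, input_2), target).
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]


def train_gate(data, lr=0.5, steps=5000):
    """Fit one sigmoid neuron to a truth table with batch gradient descent."""
    w1 = w2 = b = 0.0
    for _ in range(steps):
        g1 = g2 = gb = 0.0
        for (x1, x2), y in data:
            # Gradient of the cross-entropy loss with respect to the
            # pre-activation is simply (prediction - target).
            err = sigmoid(w1 * x1 + w2 * x2 + b) - y
            g1 += err * x1
            g2 += err * x2
            gb += err
        # Move each parameter against its accumulated gradient.
        w1 -= lr * g1
        w2 -= lr * g2
        b -= lr * gb
    return w1, w2, b


def predict(params, x1, x2):
    w1, w2, b = params
    return 1 if sigmoid(w1 * x1 + w2 * x2 + b) >= 0.5 else 0


params = train_gate(OR_DATA)
learned = [predict(params, x1, x2) for (x1, x2), _ in OR_DATA]
print(learned)
```

The same loop would fit AND by swapping in its truth table, but it could not fit XOR, which needs a hidden layer.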

Activation functions introduce non-linearity, enabling neural networks to model complex relationships. ReLU passes positive values through and outputs zero otherwise, while sigmoid and tanh squash their input smoothly into the ranges (0, 1) and (-1, 1). A steep sigmoid approaches the hard on/off switch of a logic gate, but it stays differentiable, which is what gradient-based training requires. By combining these activation functions with suitable training procedures, neural networks can emulate the behavior of logic gates and extend it to problems where the inputs are not cleanly binary.
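The three activation functions named above, along with a smooth stand-in for an AND gate, might look like this in Python. The `soft_and` helper and its `sharpness` parameter are hypothetical names introduced for this sketch; they illustrate how a steep sigmoid approximates a hard threshold.

```python
import math


def relu(z):
    # Zero for negative input, identity for positive input.
    return max(0.0, z)


def sigmoid(z):
    # Smoothly maps any real number into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    # Smoothly maps any real number into (-1, 1).
    return math.tanh(z)


def soft_and(a, b, sharpness=10.0):
    """Differentiable approximation of AND; larger sharpness means a harder switch."""
    return sigmoid(sharpness * (a + b - 1.5))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, soft_and(a, b))
```

Rounding the output of `soft_and` recovers the AND truth table, while the unrounded values remain usable for gradient-based training.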